Oh, How Adorable: Tech Giants Pretend to Be Confused by Laws

In news that will shock absolutely no one who has spent more than five minutes online, it appears our benevolent social media overlords, Meta and TikTok, may have accidentally overlooked a few minor details in the European Union’s rulebook. The EU, in its ever-optimistic quest to civilize the internet, has released “preliminary findings” suggesting these platforms are struggling with the Digital Services Act (DSA). It’s almost as if multi-billion-dollar corporations find basic compliance to be a dreadful chore. Who could have possibly guessed?

The ‘Digital Services Act for Dummies’ Edition

For those of you who don’t spend your evenings reading European regulatory documents (and I pity those who do), the DSA is the EU’s shiny new attempt to make the internet less of a dumpster fire [1]. Fully rolled out in February 2024, its noble goal is to create a safer digital space, protect users from things like disinformation and manipulation, and ensure our fundamental rights aren’t just a footnote in the terms of service we never read. The biggest players, like Meta’s Facebook and Instagram and TikTok, are designated “Very Large Online Platforms” and have the most homework to do, including being transparent about how they moderate content [1]. A task they are, apparently, failing with flying colours.

On Today’s Naughty List: Two Major Offenses

The EU’s findings point to a couple of key areas where our digital heroes are falling spectacularly short.

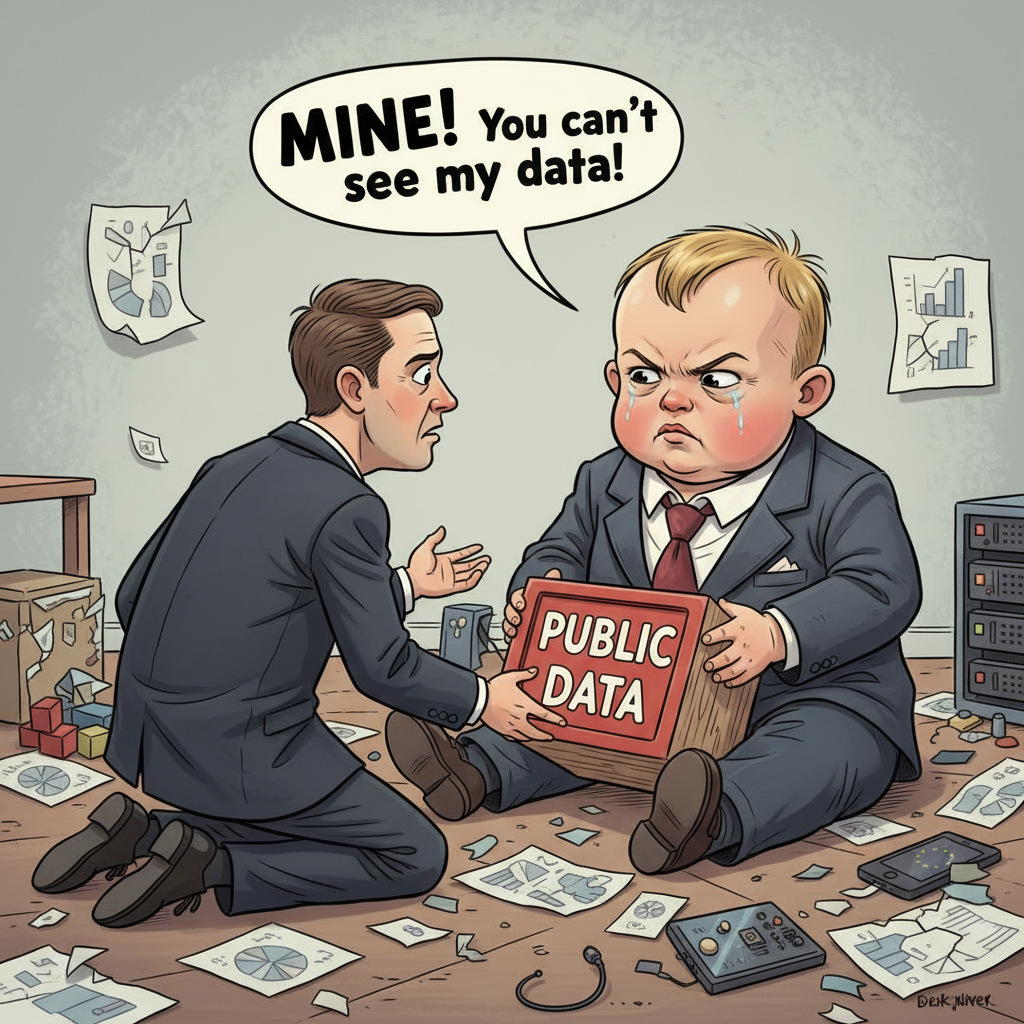

1. Hiding the Data Like It’s a State Secret

First up, both Meta and TikTok have been accused of making it nearly impossible for independent researchers to access public data [2, 3, 4, 5, 6, 7]. You see, these researchers have the audacious goal of studying how these platforms might be, you know, affecting our mental health or exposing children to harmful content. In response, the platforms have created what the EU politely calls “burdensome procedures,” which is bureaucratic speak for “a soul-crushing labyrinth of despair.” TikTok even tried to hide behind GDPR, but the EU wasn’t buying it, reminding them that transparency is, in fact, a core part of the law they’re supposed to be following [4]. It’s a classic case of “You can’t see what’s wrong if we never let you look!”

2. Meta’s ‘Good Luck Reporting That’ Button

This one is a special treat from Meta. The EU found that trying to report illegal content—like, say, child sexual abuse material or terrorist propaganda—on Facebook and Instagram is a masterclass in user-hostile design [2, 3, 4, 8]. The process involves what are described as “unnecessary steps” and “confusing” demands, which are suspected to be deliberate “dark patterns” designed to make you give up and just keep scrolling [2, 4, 8]. And if they wrongfully zap your account? Their appeal system is apparently just as useless [3]. It’s almost like they’d prefer if we didn’t bother them with all the rampant illegality they’re hosting. How inconvenient for them.

The Stakes? Just Billions of Euros

Now, Meta and TikTok have a chance to respond to these “preliminary” accusations. But if the EU’s findings stick, the consequences are more than a slap on the wrist. We’re talking about potential fines of up to 6% of their global annual turnover [4, 5, 14]. For companies this large, that’s not just pocket change; it’s a multi-billion-euro headache.

It seems the era of tech giants operating in their own little unregulated wonderland is drawing to a close, at least in Europe. One can only watch with a bucket of popcorn as they are dragged, kicking and screaming, into the world of accountability. The irony, of course, is that platforms built on sharing every detail of our lives are allegedly fighting tooth and nail to avoid sharing any details of their own operations. You just can’t write this stuff.

Sources (Because Unlike Some Companies, We Cite Our Facts)

- [1] “Digital Services Act,” Wikipedia, https://en.wikipedia.org/wiki/Digital_Services_Act

- [2] “EU finds Meta, TikTok in breach of transparency obligations,” Reuters, https://www.reuters.com/sustainability/boards-policy-regulation/eu-preliminarily-finds-meta-tiktok-breach-transparency-obligations-2025-10-24/

- [3] “EU says TikTok and Meta broke transparency rules under landmark tech law,” CNBC, https://www.cnbc.com/2025/10/24/eu-says-tiktok-and-meta-broke-transparency-rules-under-tech-law.html

- [4] “EU finds TikTok and Meta in breach of Digital Services Act transparency rules,” PPC Land, https://ppc.land/eu-finds-tiktok-and-meta-in-breach-of-digital-services-act-transparency-rules/

- [5] “EU preliminarily finds Meta and TikTok breached DSA transparency rules,” Silicon Republic, https://www.siliconrepublic.com/business/meta-tiktok-eu-breach-digital-services-act-commission

- [6] “EU finds Meta and TikTok breached data access and user rights’ rules under the DSA,” The Tech Portal, https://thetechportal.com/2025/10/24/eu-finds-meta-and-tiktok-breached-data-access-and-user-rights-rules-under-the-dsa/

- [7] “EU accuses TikTok, Meta of violating transparency rules under digital law,” UPI.com, https://www.upi.com/Top_News/World-News/2025/10/24/Belgium-European-Commission-Union-Digital-Services-Act-Meta-TikTok/1991761310746/

- [8] “EC cites Meta and TikTok for breaching transparency …,” Irish Examiner, https://www.irishexaminer.com/business/companies/arid-41730213.html

- [9] “Transparency into Meta’s Reports To the National …,” Meta Transparency Center, https://transparency.meta.com/ncmec-q2-2023/

- [10] “2024.04.19 SJC Meta Platforms Questions for the Record,” U.S. Senate Committee on the Judiciary, https://www.judiciary.senate.gov/imo/media/doc/2024-01-31_-_qfr_responses_-_zuckerberg1.pdf

- [11] “Facebook terrorist content deletion per quarter 2025,” Statista, https://www.statista.com/statistics/1013864/facebook-terrorist-propaganda-removal-quarter/

- [12] “More Speech and Fewer Mistakes,” Meta Newsroom, https://about.fb.com/news/2025/01/meta-more-speech-fewer-mistakes/

- [13] “Facebook content management controversies,” Wikipedia, https://en.wikipedia.org/wiki/Facebook_content_management_controversies

- [14] “Meta And TikTok Face Record EU Fines Over DSA Breaches,” Evrim Ağacı, https://evrimagaci.org/gpt/meta-and-tiktok-face-record-eu-fines-over-dsa-breaches-512978
